Setting up Hadoop and Hive on a Mac

The goal is to connect to Hadoop from Mule. This post covers setting up Hadoop (and Hive) on a Mac.

Environment

OS X El Capitan 10.11.6

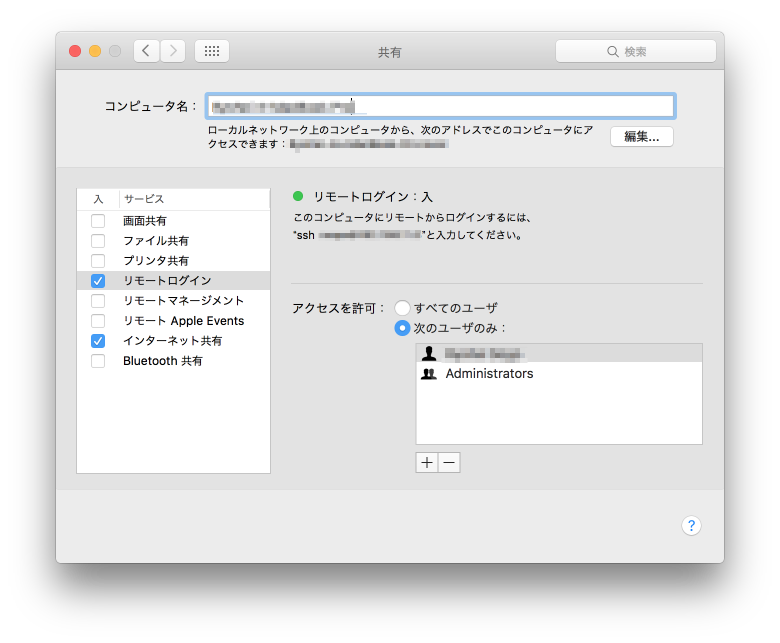

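Turn on Remote Login (System Preferences > Sharing). The Hadoop start and stop scripts log in to localhost over SSH, so they need it.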
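To confirm that Remote Login is actually accepting connections, you can try a TCP connection to the SSH port. The sketch below assumes sshd listens on the default port 22. `port_is_open` is a hypothetical helper and is not part of Hadoop or macOS.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host could not be resolved
        return False
```

For example, `port_is_open("localhost", 22)` should return True once Remote Login is on (assuming the default SSH port).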

Hadoop

install

brew install hadoop

With Homebrew, HADOOP_HOME turned out to be /usr/local/Cellar/hadoop/2.7.1/libexec. The version part of the path matches the installed version.

export HADOOP_HOME=/usr/local/Cellar/hadoop/2.7.1/libexec
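Because the version is part of the path, a Homebrew upgrade changes HADOOP_HOME. The minimal sketch below picks the newest version directory under the Cellar. It assumes the `<Cellar>/hadoop/<version>/libexec` layout seen above. `find_hadoop_home` and `version_key` are hypothetical helpers.

```python
import re
from pathlib import Path


def version_key(name: str) -> tuple:
    """Turn a directory name like '2.7.1' or '2.7.1_1' into (2, 7, 1[, 1]) for numeric sorting."""
    return tuple(int(n) for n in re.findall(r"\d+", name))


def find_hadoop_home(cellar: str = "/usr/local/Cellar/hadoop") -> Path:
    """Return <cellar>/<newest version>/libexec, assuming Homebrew's Cellar layout."""
    versions = [d for d in Path(cellar).iterdir() if d.is_dir() and (d / "libexec").is_dir()]
    if not versions:
        raise FileNotFoundError(f"no Hadoop installation found under {cellar}")
    # Compare versions numerically so that 2.10.0 sorts after 2.7.1
    newest = max(versions, key=lambda d: version_key(d.name))
    return newest / "libexec"
```

Numeric sorting matters here. A plain string sort would place "2.7.1" after "2.10.0".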

Editing the configuration files

$HADOOP_HOME/etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
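If you would rather generate these files than edit them by hand, the sketch below renders the same `<configuration>`/`<property>` structure from a dict. `build_hadoop_config` is a hypothetical helper. It omits the optional xml-stylesheet line, and `ET.indent` requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def build_hadoop_config(props: dict) -> str:
    """Render a Hadoop *-site.xml document with one <property> per key/value pair."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print in place (Python 3.9+)
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body + "\n"
```

For example, `build_hadoop_config({"fs.defaultFS": "hdfs://localhost:9000"})` produces the core-site.xml settings above.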

$HADOOP_HOME/etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
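To double-check what Hadoop will read from a *-site.xml file, you can parse it back into name/value pairs. `read_hadoop_config` is a hypothetical helper. It only reads `<property>` entries and does not apply Hadoop's defaults or variable expansion.

```python
import xml.etree.ElementTree as ET


def read_hadoop_config(path: str) -> dict:
    """Return {name: value} for every <property> in a Hadoop *-site.xml file."""
    root = ET.parse(path).getroot()  # processing instructions such as xml-stylesheet are ignored
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props
```

Applied to the hdfs-site.xml above, this returns {"dfs.replication": "1"}.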

Formatting the NameNode

hadoop namenode -format

$ hadoop namenode -format
DEPRECATED: Use of this script to execute hdfs command is deprecated.
Instead use the hdfs command for it.
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
16/09/19 18:56:23 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = ryohei-no-macbook-pro.local/192.168.11.5
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 2.7.1
STARTUP_MSG: classpath = (omitted)
STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a; compiled by 'jenkins' on 2015-06-29T06:04Z
STARTUP_MSG: java = 1.8.0_45
************************************************************/
16/09/19 18:56:23 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
16/09/19 18:56:23 INFO namenode.NameNode: createNameNode [-format]
16/09/19 18:56:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Formatting using clusterid: CID-2c03a33c-9032-4af1-a0d8-195f92730991
16/09/19 18:56:24 INFO namenode.FSNamesystem: No KeyProvider found.
16/09/19 18:56:24 INFO namenode.FSNamesystem: fsLock is fair:true
16/09/19 18:56:24 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
16/09/19 18:56:24 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
16/09/19 18:56:24 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
16/09/19 18:56:24 INFO blockmanagement.BlockManager: The block deletion will start around 2016 9 19 18:56:24
16/09/19 18:56:24 INFO util.GSet: Computing capacity for map BlocksMap
16/09/19 18:56:24 INFO util.GSet: VM type = 64-bit
16/09/19 18:56:24 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
16/09/19 18:56:24 INFO util.GSet: capacity = 2^21 = 2097152 entries
16/09/19 18:56:24 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
16/09/19 18:56:24 INFO blockmanagement.BlockManager: defaultReplication = 1
16/09/19 18:56:24 INFO blockmanagement.BlockManager: maxReplication = 512
16/09/19 18:56:24 INFO blockmanagement.BlockManager: minReplication = 1
16/09/19 18:56:24 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
16/09/19 18:56:24 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
16/09/19 18:56:24 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
16/09/19 18:56:24 INFO blockmanagement.BlockManager: encryptDataTransfer = false
16/09/19 18:56:24 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
16/09/19 18:56:24 INFO namenode.FSNamesystem: fsOwner = rsogo (auth:SIMPLE)
16/09/19 18:56:24 INFO namenode.FSNamesystem: supergroup = supergroup
16/09/19 18:56:24 INFO namenode.FSNamesystem: isPermissionEnabled = true
16/09/19 18:56:24 INFO namenode.FSNamesystem: HA Enabled: false
16/09/19 18:56:24 INFO namenode.FSNamesystem: Append Enabled: true
16/09/19 18:56:24 INFO util.GSet: Computing capacity for map INodeMap
16/09/19 18:56:24 INFO util.GSet: VM type = 64-bit
16/09/19 18:56:24 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
16/09/19 18:56:24 INFO util.GSet: capacity = 2^20 = 1048576 entries
16/09/19 18:56:24 INFO namenode.FSDirectory: ACLs enabled? false
16/09/19 18:56:24 INFO namenode.FSDirectory: XAttrs enabled? true
16/09/19 18:56:24 INFO namenode.FSDirectory: Maximum size of an xattr: 16384
16/09/19 18:56:24 INFO namenode.NameNode: Caching file names occuring more than 10 times
16/09/19 18:56:24 INFO util.GSet: Computing capacity for map cachedBlocks
16/09/19 18:56:24 INFO util.GSet: VM type = 64-bit
16/09/19 18:56:24 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
16/09/19 18:56:24 INFO util.GSet: capacity = 2^18 = 262144 entries
16/09/19 18:56:24 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
16/09/19 18:56:24 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
16/09/19 18:56:24 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
16/09/19 18:56:24 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
16/09/19 18:56:24 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
16/09/19 18:56:24 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
16/09/19 18:56:24 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
16/09/19 18:56:24 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
16/09/19 18:56:24 INFO util.GSet: Computing capacity for map NameNodeRetryCache
16/09/19 18:56:24 INFO util.GSet: VM type = 64-bit
16/09/19 18:56:24 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
16/09/19 18:56:24 INFO util.GSet: capacity = 2^15 = 32768 entries
16/09/19 18:56:24 INFO namenode.FSImage: Allocated new BlockPoolId: BP-816378738-192.168.11.5-1474278984811
16/09/19 18:56:24 INFO common.Storage: Storage directory /tmp/hadoop-rsogo/dfs/name has been successfully formatted.
16/09/19 18:56:25 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
16/09/19 18:56:25 INFO util.ExitUtil: Exiting with status 0
16/09/19 18:56:25 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at {Dummy}
************************************************************/
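The only lines in this long log that matter for success are the "successfully formatted" message and the exit status. The sketch below pulls both out. It assumes the message wording matches the Hadoop 2.7.1 output shown here, since other versions may phrase it differently. `check_format_log` is a hypothetical helper.

```python
import re

# Patterns copied from the Hadoop 2.7.1 format log above
_FORMATTED = re.compile(r"Storage directory (\S+) has been successfully formatted\.")
_EXIT = re.compile(r"Exiting with status (\d+)")


def check_format_log(log: str):
    """Return (succeeded, storage_dir) from the output of `hadoop namenode -format`."""
    formatted = _FORMATTED.search(log)
    exited = _EXIT.search(log)
    succeeded = bool(formatted) and exited is not None and exited.group(1) == "0"
    return succeeded, formatted.group(1) if formatted else None
```

For the log above, this yields success with the storage directory /tmp/hadoop-rsogo/dfs/name.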

Starting with start-all.sh

Each Password: prompt in the output is SSH asking for your Mac login password.

$ $HADOOP_HOME/sbin/start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
16/09/19 22:36:55 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [localhost]
Password:
localhost: starting namenode, logging to /usr/local/Cellar/hadoop/2.7.1/libexec/logs/hadoop-rsogo-namenode-Ryohei-no-MacBook-Pro.local.out
localhost: Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
Password:
localhost: starting datanode, logging to /usr/local/Cellar/hadoop/2.7.1/libexec/logs/hadoop-rsogo-datanode-Ryohei-no-MacBook-Pro.local.out
localhost: Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
Starting secondary namenodes [0.0.0.0]
Password:
0.0.0.0: starting secondarynamenode, logging to /usr/local/Cellar/hadoop/2.7.1/libexec/logs/hadoop-rsogo-secondarynamenode-Ryohei-no-MacBook-Pro.local.out
0.0.0.0: Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
16/09/19 22:37:36 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
starting yarn daemons
starting resourcemanager, logging to /usr/local/Cellar/hadoop/2.7.1/libexec/logs/yarn-rsogo-resourcemanager-Ryohei-no-MacBook-Pro.local.out
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
Password:
localhost: starting nodemanager, logging to /usr/local/Cellar/hadoop/2.7.1/libexec/logs/yarn-rsogo-nodemanager-Ryohei-no-MacBook-Pro.local.out
localhost: Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
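The start-up log only shows that each daemon was launched, not that it stayed up. One way to confirm is the JDK's jps tool, which prints one running JVM per line as `<pid> <main class>`. The sketch below assumes jps is on PATH and that the five class names match what jps reports for this single-node setup. `missing_daemons` and the other names are hypothetical helpers.

```python
import subprocess

# Daemons launched by start-all.sh in the log above, named by their main classes
EXPECTED = {"NameNode", "DataNode", "SecondaryNameNode", "ResourceManager", "NodeManager"}


def running_java_classes(jps_output: str) -> set:
    """Parse jps lines of the form '<pid> <ClassName>' into a set of class names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str) -> set:
    """Return the expected Hadoop daemons that do not appear in the jps output."""
    return EXPECTED - running_java_classes(jps_output)


def check_with_jps() -> set:
    """Run jps (assumed to be on PATH) and return any missing daemons."""
    out = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
    return missing_daemons(out)
```

An empty set from `check_with_jps()` means all five daemons are running. If a name is missing, the matching .out or .log file under $HADOOP_HOME/logs is the place to look.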

Checking the version

$ hadoop version
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
Hadoop 2.7.1
Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 15ecc87ccf4a0228f35af08fc56de536e6ce657a
Compiled by jenkins on 2015-06-29T06:04Z
Compiled with protoc 2.5.0
From source with checksum fc0a1a23fc1868e4d5ee7fa2b28a58a
This command was run using /usr/local/Cellar/hadoop/2.7.1/libexec/share/hadoop/common/hadoop-common-2.7.1.jar
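If a script needs to check the installed version (for example, before pointing a client at the cluster), the "Hadoop X.Y.Z" line is easy to extract. `parse_hadoop_version` and `version_at_least` are hypothetical helpers. The sketch assumes the output format shown above.

```python
import re


def parse_hadoop_version(output: str):
    """Extract the version from `hadoop version` output (the line 'Hadoop X.Y.Z')."""
    match = re.search(r"^Hadoop (\S+)\s*$", output, re.MULTILINE)
    return match.group(1) if match else None


def version_at_least(version: str, minimum: tuple) -> bool:
    """Compare the numeric parts of a version like '2.7.1' with a tuple like (2, 7)."""
    numbers = tuple(int(n) for n in re.findall(r"\d+", version))
    return numbers >= minimum
```

For the output above, `parse_hadoop_version` returns "2.7.1".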

Stopping with stop-all.sh

$ $HADOOP_HOME/sbin/stop-all.sh
This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
16/09/19 22:34:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Stopping namenodes on [localhost]
Password:
localhost: stopping namenode
Password:
localhost: stopping datanode
Stopping secondary namenodes [0.0.0.0]
Password:
0.0.0.0: stopping secondarynamenode
Picked up JAVA_TOOL_OPTIONS: -Dfile.encoding=UTF-8
16/09/19 22:34:55 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
stopping yarn daemons
stopping resourcemanager
Password:
localhost: stopping nodemanager
no proxyserver to stop
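Both start-all.sh and stop-all.sh print a deprecation notice recommending the separate dfs and yarn scripts. The sketch below builds those script paths in the same order the logs above show (HDFS first, then YARN) and runs them one at a time. `control_scripts` and `run_scripts` are hypothetical helpers, and the sketch assumes the scripts live in $HADOOP_HOME/sbin as they do here.

```python
import os
import subprocess


def control_scripts(hadoop_home: str, action: str) -> list:
    """Return the non-deprecated sbin scripts for 'start' or 'stop', in the order start-all/stop-all use."""
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")
    return [os.path.join(hadoop_home, "sbin", f"{action}-{part}.sh") for part in ("dfs", "yarn")]


def run_scripts(scripts: list) -> None:
    """Run each script in turn; raises CalledProcessError at the first non-zero exit."""
    for script in scripts:
        subprocess.run([script], check=True)
```

Usage: `run_scripts(control_scripts(os.environ["HADOOP_HOME"], "stop"))`. You will still be asked for your password at each SSH login.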

Hive

install

$ brew install hive

Starting Hive

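To check that Hive works, start the Hive CLI and list the databases. If the list is displayed (a fresh install shows only default), the setup is OK.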

$ hive
hive> show databases;
show databases
OK
default
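The same check can be scripted without the interactive prompt by using the Hive CLI's -e (run a query string) and -S (silent mode) options. The sketch assumes the Homebrew `hive` command is on PATH. It also assumes that in silent mode only result rows reach stdout, while messages such as "Picked up JAVA_TOOL_OPTIONS" go to stderr. If extra lines show up in your setup, filter them in `parse_rows`. Both function names are hypothetical.

```python
import subprocess


def parse_rows(stdout: str) -> list:
    """Split silent-mode Hive CLI output into rows, dropping blank lines."""
    return [line.strip() for line in stdout.splitlines() if line.strip()]


def hive_query(sql: str) -> list:
    """Run one statement with `hive -S -e` and return its output rows (assumes hive is on PATH)."""
    result = subprocess.run(["hive", "-S", "-e", sql],
                            capture_output=True, text=True, check=True)
    return parse_rows(result.stdout)
```

For example, `"default" in hive_query("show databases;")` should be True on a working install.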

Preparing a database with Hive

Next, I use Hive to create a database and a table on the environment set up on the Mac above.

Creating a Hive database

Check the existing databases

hive> show databases;
show databases
OK
default
Time taken: 0.567 seconds, Fetched: 1 row(s)

Create a database

hive> create database testdb;
create database testdb
OK
Time taken: 0.253 seconds
hive> show databases;
show databases
OK
default
testdb
Time taken: 0.074 seconds, Fetched: 2 row(s)

Select the database

hive> use testdb;
use testdb
OK
Time taken: 0.018 seconds

Creating a Hive table

Check the existing tables

hive> show tables;
show tables
OK
Time taken: 0.211 seconds

Create a table

hive> CREATE TABLE pokes (foo INT, bar STRING);
CREATE TABLE pokes (foo INT, bar STRING)
OK
Time taken: 0.581 seconds
hive> show tables;
show tables
OK
pokes
Time taken: 0.075 seconds, Fetched: 1 row(s)
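When table definitions come from code (for example, a flow that prepares test data), the CREATE TABLE statement can be assembled from (name, type) pairs. `create_table_sql` is a hypothetical helper. To keep unquoted string building safe, it accepts only plain identifiers (letters, digits, underscores). Column types are passed through unchecked.

```python
import re

_IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def create_table_sql(table: str, columns: list) -> str:
    """Build a HiveQL CREATE TABLE statement from (column name, type) pairs."""
    for name in [table] + [col for col, _ in columns]:
        # Reject anything that is not a plain identifier rather than trying to quote it
        if not _IDENT.fullmatch(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    cols = ", ".join(f"{name} {col_type}" for name, col_type in columns)
    return f"CREATE TABLE {table} ({cols});"
```

`create_table_sql("pokes", [("foo", "INT"), ("bar", "STRING")])` reproduces the statement used above.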

Check the definition of the new table

hive> describe pokes;
describe pokes
OK
foo int
bar string
Time taken: 0.588 seconds, Fetched: 2 row(s)
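To compare a table's actual definition with what you expected, the describe rows can be turned into (column, type) pairs. The sketch assumes the rows come from silent-mode output (for example, `hive -S -e "describe pokes;"`). It also assumes they are tab-separated col_name / data_type / comment fields, possibly padded with spaces. It stops at the first line starting with "#", where Hive lists partition information for partitioned tables. `parse_describe` is a hypothetical helper.

```python
def parse_describe(output: str) -> list:
    """Turn tab-separated `describe <table>` rows into (column, type) pairs."""
    columns = []
    for line in output.splitlines():
        if line.strip().startswith("#"):
            break  # start of a partition-information section
        parts = [field.strip() for field in line.split("\t")]
        if len(parts) >= 2 and parts[0]:
            columns.append((parts[0], parts[1]))
    return columns
```

For the pokes table, this should give [("foo", "int"), ("bar", "string")].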